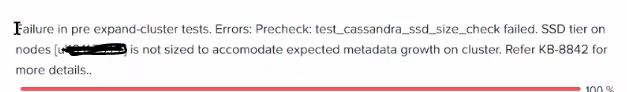

Recently, while expanding a Nutanix cluster, I ran into an SSD size error. Even though the new node matched the existing nodes in the cluster, the error prevented me from joining the node to the cluster.

In this post I will illustrate the issue and how to fix it.

The Issue:

When we expand a cluster, a set of tests runs first. One of them is the cassandra_ssd_size check: if the SSD size does not match the expected value, the check fails and we are not allowed to expand the cluster.

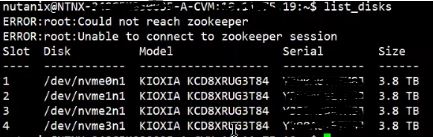

So I checked the node and confirmed that the capacity of its SSDs (NVMe, since it is an all-flash node) matches the SSDs already in the cluster. The list of disks can be seen below:

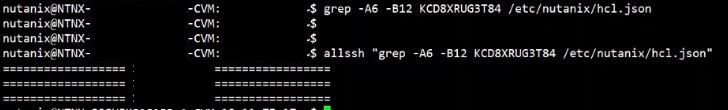

The next step was to confirm whether the disk model is listed in the hcl file on the new node and on the cluster node(s).

On the existing nodes in the cluster, the disk model does not show up in the hcl file.

When checking the last edit time of hcl.json, we found that it is an old file:

CVM# cat /etc/nutanix/hcl.json | (head ; tail) | grep -C 1 last_edit

CVM# date --date=@<valueOfAbove>

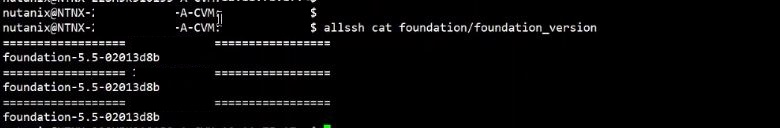
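The two commands above can be folded into a pair of small helpers. This is only a sketch: the function names `hcl_last_edit`, `to_seconds` and `epoch_to_date` are made up for illustration, it assumes `last_edit` holds a plain integer epoch value, and it assumes that a value longer than 10 digits is in milliseconds rather than seconds. `date --date=@...` is GNU date syntax.

```shell
# Print the first "last_edit" value found in an HCL JSON file.
# Assumption: the value is a plain integer epoch timestamp.
hcl_last_edit() {
    grep -o '"last_edit"[[:space:]]*:[[:space:]]*[0-9][0-9]*' "$1" \
        | head -n 1 \
        | grep -o '[0-9][0-9]*$'
}

# Normalise an epoch value to seconds.
# Assumption: anything longer than 10 digits is in milliseconds.
to_seconds() {
    if [ "${#1}" -gt 10 ]; then
        echo $(( $1 / 1000 ))
    else
        echo "$1"
    fi
}

# Convert an epoch value (seconds) to a readable UTC date (GNU date).
epoch_to_date() {
    date --utc --date="@$1" '+%Y-%m-%d %H:%M:%S UTC'
}

# Usage on a CVM (path taken from the post):
#   epoch_to_date "$(to_seconds "$(hcl_last_edit /etc/nutanix/hcl.json)")"
```

Running the same usage line against the Foundation copy of hcl.json gives a second date to compare against, which makes it easy to see which of the two files is older.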

However, the Foundation version on the cluster is up to date, which means the file above was not refreshed along with it and we need to replace it manually.

Every time we upgrade Foundation, the hcl file is updated from it, and we can find a copy of hcl.json here:

CVM# less foundation/lib/foundation-platforms/hcl.json

Now, if you run a simple cat | grep against the above file with the model of the NVMe drive on the new node, you should get matches.

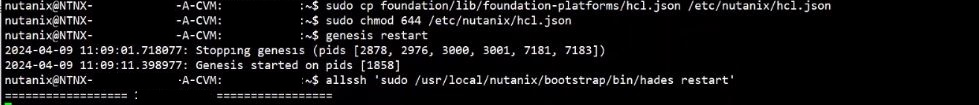
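To check both copies of the file in one go, something like the following could be used. It is a minimal sketch: `hcl_has_model` and `report_model` are hypothetical helper names, the model string is a placeholder you supply yourself, and the match is a plain fixed-string search rather than a real JSON lookup.

```shell
# Return 0 if the model string appears anywhere in the file.
# -F treats the model as a fixed string, -q suppresses output.
hcl_has_model() {
    grep -q -F -- "$2" "$1"
}

# Print, for each file given, whether the model was found in it.
report_model() {
    local model=$1
    shift
    local f
    for f in "$@"; do
        if hcl_has_model "$f" "$model"; then
            echo "$f: found"
        else
            echo "$f: MISSING"
        fi
    done
}

# Usage on a CVM (paths from the post, model string is a placeholder):
#   report_model "<nvme-model>" /etc/nutanix/hcl.json \
#       foundation/lib/foundation-platforms/hcl.json
```

In the situation described in this post, you would expect the active file under /etc/nutanix to report MISSING and the Foundation copy to report found.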

Once that is confirmed, we need to copy the file, restart genesis on the current node, and restart the hades service at the cluster level. This will automatically copy the new hcl file to the other nodes:

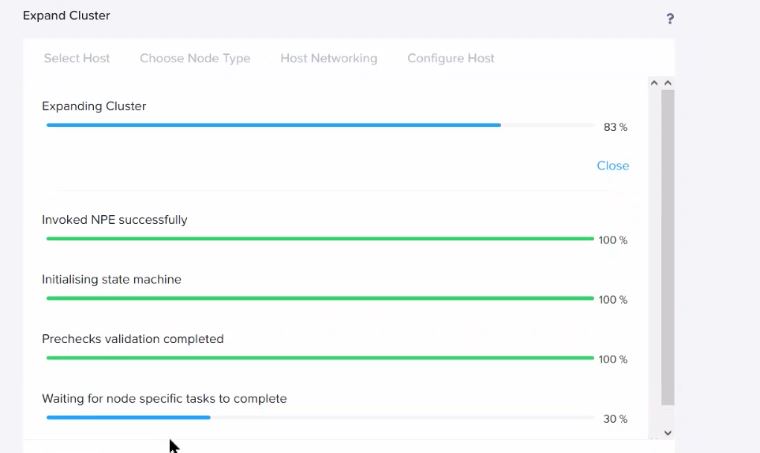

Once the above has succeeded, we can try adding the new node to the cluster again, and this time you should not get the error (at least not the same error!).

Cheers,